Website NOT in Google Search Results? Check robots.txt

Introduction: You launched your website several days ago but still cannot find it in Google Search, even though you can reach it by typing the domain into the URL bar. What’s wrong? It might be your robots.txt. But first, you should check whether your website has been indexed by Google.

Check if your website is indexed by Google

Type the search query “site:yourdomain” into Google. For example, for my website I would type site:edward-designer.com

If the search returns zero results, there is a high probability that your robots.txt is configured incorrectly.

What is robots.txt?

robots.txt is a very small configuration file in the web root of your domain, yet it has a great deal of power over whether your website appears in search engine results pages. It tells search engine crawlers whether or not they may crawl your website (or parts of it), and pages that cannot be crawled will generally not show up properly in the index. If the search query “site:yourdomain.com” returns no results but you can access the website through yourdomain.com, there is a high probability that the robots.txt file contains a “disallow all” rule for your website:

User-agent: *

Disallow: /
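To confirm that a rule like this really blocks a crawler, one option is Python's standard `urllib.robotparser` module. The sketch below is only an illustration and not Google's own matching logic: the helper name `is_allowed`, the `example.com` URL and the `Googlebot` user-agent string are assumptions chosen for the example. Note that the two directives sit on consecutive lines, as they would in the actual file.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt lets user_agent fetch url (hypothetical helper)."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines; no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


DISALLOW_ALL = "User-agent: *\nDisallow: /"
ALLOW_ALL = "User-agent: *\nDisallow:"

if __name__ == "__main__":
    home = "https://example.com/"  # placeholder domain, not a real site of yours
    print(is_allowed(DISALLOW_ALL, home))  # False: every path is blocked
    print(is_allowed(ALLOW_ALL, home))     # True: an empty Disallow blocks nothing
```

Pasting the content of your own robots.txt into `is_allowed` gives a quick local check before waiting on any search engine.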

According to Google, the robots.txt is discovered by Google and cached for up to a day:

A robots.txt request is generally cached for up to one day, but may be cached longer in situations where refreshing the cached version is not possible (for example, due to timeouts or 5xx errors). The cached response may be shared by different crawlers. Google may increase or decrease the cache lifetime based on max-age Cache-Control HTTP headers.
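Since the quote mentions `max-age` in the `Cache-Control` header, it can be useful to know what value your server sends along with robots.txt. The following is a minimal sketch that only parses a header value you have already obtained; the function name `max_age_seconds` is hypothetical, and how much weight Google actually gives the value is not something this code can tell you.

```python
import re
from typing import Optional


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Extract the max-age value (in seconds) from a Cache-Control header value.

    Returns None when the header carries no max-age directive.
    """
    # Require start-of-string, a comma or whitespace before the directive name.
    match = re.search(r"(?:^|[,\s])max-age\s*=\s*(\d+)", cache_control, re.IGNORECASE)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    print(max_age_seconds("public, max-age=3600"))  # 3600
    print(max_age_seconds("no-store"))              # None
```

A very large `max-age` on robots.txt would be one thing to rule out if an old version seems to linger.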

However, in reality, you might find that Google Webmaster Tools still shows the cached version of robots.txt for several days after you have changed the file. Until the new version is picked up, your website will still not be indexed by Google.

How to “resubmit” robots.txt to Google Webmaster Tools?

Since Google actively discovers the robots.txt and caches it for efficiency, there is NO WAY to submit or re-submit the robots.txt. In Google’s terms, you can only wait for Google to come to your website and read the new robots.txt. Oh no.

There are some “unofficial” ways to hasten the process if you would rather take action than sit and wait. The suggested actions are listed below; note, however, that there is no evidence as to whether they actually help:

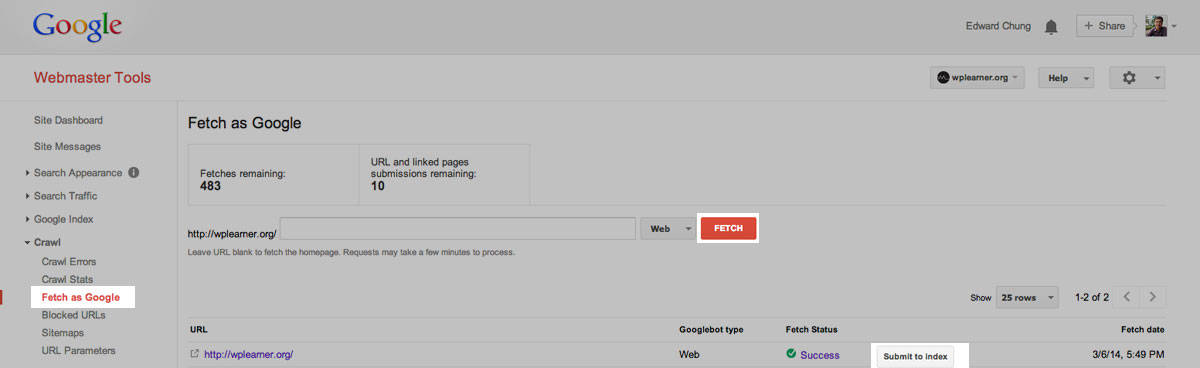

- Use the “Fetch as Google” tool to 1) fetch the homepage of your website and 2) submit it to the Google index. This tells Google that your homepage can be accessed.

- Use the “Fetch as Google” tool to fetch the robots.txt of your website and submit it to the Google index. This might let Google know that your robots.txt has been updated.

- Resubmit the website sitemap at Crawl>Sitemap. The sitemap itself was probably blocked by the old robots.txt, but try it nevertheless.
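Before resubmitting anything, it may help to check locally which URLs, including the sitemap, your old and new rules block. This is a rough sketch using Python's standard `urllib.robotparser`; the helper `blocked_urls`, the `example.com` URLs and the `/private/` rule are made up for illustration, and Python's matching may differ from Google's in edge cases such as wildcards.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: List[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the subset of urls that robots_txt forbids for user_agent (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse in-memory text, no network access
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


OLD_RULES = "User-agent: *\nDisallow: /"          # blocks the whole site
NEW_RULES = "User-agent: *\nDisallow: /private/"  # blocks one directory only

URLS = [
    "https://example.com/",
    "https://example.com/sitemap.xml",
    "https://example.com/private/draft.html",
]

if __name__ == "__main__":
    print(blocked_urls(OLD_RULES, URLS))  # all three URLs, sitemap included
    print(blocked_urls(NEW_RULES, URLS))  # only the /private/ page
```

If the sitemap URL still appears in the blocked list under your new rules, the robots.txt needs another look before any resubmission can help.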

Check Google Webmaster Tools again after several hours, and you should be able to see that the updated robots.txt has been “resubmitted”. Google will then crawl the allowed portion of your website, and you can check the index status with the query “site:yourdomain”.

However, if your website still does not show up in the search results, then the robots.txt might not be the reason. You might need to look deeper for technical and content errors.

Hi, my name is Edward Chung, PMP, PMI-ACP®, ITIL® Foundation. Like most of us, I am a working professional pursuing career advancements through Certifications. As I am having a full-time job and a family with 3 kids, I need to pursue professional certifications in the most effective way (i.e. with the least amount of time). I share my exam tips here in the hope of helping fellow Certification aspirants!

My website also just vanished from the results for particular keywords.

You may need to check whether Google has taken some action against your website, or whether it simply does not rank well for those keywords. Wish you good luck!